Table of Contents

- Notice and Disclaimer

- Foreword

- 1. Scope and Field of Application

- 2. Normative References

- 3. Definitions

- 4. Symbols and Abbreviations

- 5. Conventions

- A. Explanation of Patient Orientation (Normative)

- B. Integration of Modality Worklist and Modality Performed Procedure Step in The Original DICOM Standard (Informative)

- C. Waveforms (Informative)

- D. SR Encoding Example (Informative)

- E. Mammography CAD (Informative)

- F. Chest CAD (Informative)

- F.1. Chest CAD SR Content Tree Structure

- F.2. Chest CAD SR Observation Context Encoding

- F.3. Chest CAD SR Examples

- F.3.1. Example 1: Lung Nodule Detection With No Findings

- F.3.2. Example 2: Lung Nodule Detection With Findings and Anatomy/pathology Interpretation

- F.3.3. Example 3: Lung Nodule Detection, Temporal Differencing With Findings

- F.3.4. Example 4: Lung Nodule Detection in Chest Radiograph, Spatially Correlated With CT

- G. Explanation of Grouping Criteria For Multi-frame Functional Group IODs (Informative)

- H. Clinical Trial Identification Workflow Examples (Informative)

- I. Ultrasound Templates (Informative)

- I.1. SR Content Tree Structure

- I.2. Procedure Summary

- I.3. Multiple Fetuses

- I.4. Explicitly Specifying Calculation Dependencies

- I.5. Linking Measurements to Images, Coordinates

- I.6. OB Patterns

- I.7. Selected Value

- I.8. OB-GYN Examples

- I.8.1. Example 1: OB-GYN Root with Observation Context

- I.8.2. Example 2: OB-GYN Patient Characteristics and Procedure Summary

- I.8.3. Example 3: OB-GYN Multiple Fetus

- I.8.4. Example 4: Biophysical Profile

- I.8.5. Example 5: Biometry Ratios

- I.8.6. Example 6: Biometry

- I.8.7. Example 7: Amniotic Sac

- I.8.8. Example 8: OB-GYN Ovaries

- I.8.9. Example 9: OB-GYN Follicles

- I.8.10. Example 10: Pelvis and Uterus

- J. Handling of Identifying Parameters (Informative)

- K. Ultrasound Staged Protocol Data Management (Informative)

- K.1. Purpose of this Annex

- K.2. Prerequisites For Support

- K.3. Definition of a Staged Protocol Exam

- K.4. Attributes Used in Staged Protocol Exams

- K.5. Guidelines

- L. Hemodynamics Report Structure (Informative)

- M. Vascular Ultrasound Reports (Informative)

- N. Echocardiography Procedure Reports (Informative)

- N.1. Echo Patterns

- N.2. Measurement Terminology Composition

- N.3. Illustrative Mapping to ASE Concepts

- N.3.1. Aorta

- N.3.2. Aortic Valve

- N.3.3. Left Ventricle - Linear

- N.3.4. Left Ventricle Volumes and Ejection Fraction

- N.3.5. Left Ventricle Output

- N.3.6. Left Ventricular Outflow Tract

- N.3.7. Left Ventricle Mass

- N.3.8. Left Ventricle Miscellaneous

- N.3.9. Mitral Valve

- N.3.10. Pulmonary Vein

- N.3.11. Left Atrium / Appendage

- N.3.12. Right Ventricle

- N.3.13. Pulmonic Valve / Pulmonic Artery

- N.3.14. Tricuspid Valve

- N.3.15. Right Atrium / Inferior Vena Cava

- N.3.16. Congenital/Pediatric

- N.4. Encoding Examples

- N.5. IVUS Report

- O. Registration (Informative)

- P. Transforms and Mappings (Informative)

- Q. Breast Imaging Report (Informative)

- R. Configuration Use Cases (Informative)

- S. Legacy Transition For Configuration Management (Informative)

- S.1. Legacy Association Requester, Configuration Managed Association Acceptor

- S.2. Managed Association Requester, Legacy Association Acceptor

- S.3. No DDNS Support

- S.4. Partially Managed Devices

- S.5. Adding The First Managed Device to A Legacy Network

- S.6. Switching A Node From Unmanaged to Managed in A Mixed Network

- T. Quantitative Analysis References (Informative)

- U. Ophthalmology Use Cases (Informative)

- U.1. Ophthalmic Photography Use Cases

- U.1.1. Routine N-spot Exam

- U.1.2. Routine N-spot Exam With Exceptions

- U.1.3. Routine Fluorescein Exam

- U.1.4. External Examination

- U.1.5. External Examination With Intention

- U.1.6. External Examination With Drug Application

- U.1.7. Routine Stereo Camera Examination

- U.1.8. Relative Image Position Definitions

- U.2. Typical Sequence of Events

- U.3. Ophthalmic Tomography Use Cases (Informative)

- V. Hanging Protocols (Informative)

- W. Digital Signatures in Structured Reports Use Cases (Informative)

- X. Dictation-based Reporting With Image References (Informative)

- Y. VOI LUT Functions (Informative)

- Z. X-Ray Isocenter Reference Transformations (Informative)

- AA. Radiation Dose Reporting Use Cases (Informative)

- BB. Printing (Informative)

- CC. Storage Commitment (Informative)

- DD. Worklists (Informative)

- EE. Relevant Patient Information Query (Informative)

- FF. CT/MR Cardiovascular Analysis Report Templates (Informative)

- GG. JPIP Referenced Pixel Data Transfer Syntax Negotiation (Informative)

- HH. Segmentation Encoding Example (Informative)

- II. Use of Product Characteristics Attributes in Composite SOP Instances (Informative)

- JJ. Surface Mesh Representation (Informative)

- KK. Use Cases For The Composite Instance Root Retrieval Classes (Informative)

- LL. Example SCU Use of The Composite Instance Root Retrieval Classes (Informative)

- MM. Considerations For Applications Creating New Images From Multi-frame Images

- MM.1. Scope

- MM.2. Frame Extraction Issues

- MM.2.1. Number of Frames

- MM.2.2. Start and End Times

- MM.2.3. Time Interval versus Frame Increment Vector

- MM.2.4. MPEG-2, MPEG-4 AVC/H.264 or HEVC/H.265

- MM.2.5. JPEG 2000 Part 2 Multi-Component Transform

- MM.2.6. Functional Groups For Enhanced CT, MR, etc.

- MM.2.7. Nuclear Medicine Images

- MM.2.8. A "Single Frame" Multi-frame Image

- MM.3. Frame Numbers

- MM.4. Consistency

- MM.5. Time Synchronization

- MM.6. Audio

- MM.7. Private Attributes

- NN. Specimen Identification and Management

- OO. Structured Display (Informative)

- PP. 3D Ultrasound Volumes (Informative)

- QQ. Enhanced US Data Type Blending Examples (Informative)

- QQ.1. Enhanced US Volume Use of the Blending and Display Pipeline

- QQ.1.1. Example 1 - Grayscale P-Values Output

- QQ.1.2. Example 2 - Grayscale-only Color Output

- QQ.1.3. Example 3 - Color Tissue (Pseudo-color) Mapping

- QQ.1.4. Example 4 - Fixed Proportion Additive Grayscale Tissue and Color Flow

- QQ.1.5. Example 5 - Threshold Based On Flow_velocity

- QQ.1.6. Example 6 - Threshold Based On Flow_velocity and Flow_variance W/2D Color Mapping

- QQ.1.7. Example 7 - Color Tissue / Velocity / Variance Mapping - Blending Considers Both Data Paths

- RR. Ophthalmic Refractive Reports Use Cases (Informative)

- SS. Colon CAD (Informative)

- TT. Stress Testing Report Template (Informative)

- UU. Macular Grid Thickness and Volume Report Use Cases (Informative)

- VV. Pediatric, Fetal and Congenital Cardiac Ultrasound Reports (Informative)

- WW. Audit Messages (Informative)

- XX. Use Cases for Application Hosting

- YY. Compound and Combined Graphic Objects in Presentation States (Informative)

- ZZ. Implant Template Description

- AAA. Implantation Plan SR Document (Informative)

- BBB. Unified Procedure Step in Radiotherapy (Informative)

- BBB.1. Purpose of this Annex

- BBB.2. Use Case Actors

- BBB.3. Use Cases

- BBB.3.1. Treatment Delivery Normal Flow - Internal Verification

- BBB.3.2. Treatment Delivery Normal Flow - External Verification

- BBB.3.3. Treatment-delivery With External Verification - Override Or Additional Info Required

- BBB.3.4. Treatment-delivery With External Verification - Machine Adjustment Required

- CCC. Ophthalmic Axial Measurements and Intraocular Lens Calculations Use Cases (Informative)

- DDD. Visual Field Static Perimetry Use Cases (Informative)

- EEE. Intravascular OCT Image (Informative)

- FFF. Enhanced XA/XRF Encoding Examples (Informative)

- FFF.1. General Concepts of X-Ray Angiography

- FFF.1.1. Time Relationships

- FFF.1.2. Acquisition Geometry

- FFF.1.3. Calibration

- FFF.1.4. X-Ray Generation

- FFF.1.5. Pixel Data Properties and Display Pipeline

- FFF.2. Application Cases

- FFF.2.1. Acquisition

- FFF.2.1.1. ECG Recording at Acquisition Modality

- FFF.2.1.1.1. User Scenario

- FFF.2.1.1.2. Encoding Outline

- FFF.2.1.1.3. Encoding Details

- FFF.2.1.1.3.1. Enhanced XA Image

- FFF.2.1.1.3.1.1. Synchronization Module Recommendations

- FFF.2.1.1.3.1.2. General Equipment Module Recommendations

- FFF.2.1.1.3.1.3. Cardiac Synchronization Module Recommendations

- FFF.2.1.1.3.1.4. Enhanced XA/XRF Image Module Recommendations

- FFF.2.1.1.3.1.5. Cardiac Synchronization Macro Recommendations

- FFF.2.1.1.3.1.6. Frame Content Macro Recommendations

- FFF.2.1.1.3.2. General ECG Object

- FFF.2.1.1.4. Examples

- FFF.2.1.2. Multi-modality Waveform Synchronization

- FFF.2.1.2.1. Both Modalities Synchronized Via NTP

- FFF.2.1.2.2. One Modality Sends Trigger to The Other Modality

- FFF.2.1.3. Mechanical Movement

- FFF.2.1.3.1. Rotational Acquisition

- FFF.2.1.3.2. Peripheral/stepping Acquisition

- FFF.2.1.4. Changes in X-Ray Controls

- FFF.2.1.5. Image Detector and Field of View

- FFF.2.1.5.1. User Scenario

- FFF.2.1.5.2. Encoding Outline

- FFF.2.1.5.3. Encoding Details

- FFF.2.1.5.3.1. XA/XRF Acquisition Module Recommendations

- FFF.2.1.5.3.2. X-Ray Image Intensifier Module Recommendations

- FFF.2.1.5.3.3. X-Ray Detector Module Recommendations

- FFF.2.1.5.3.4. X-Ray Field of View Macro Recommendations

- FFF.2.1.5.3.5. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.1.5.4. Examples

- FFF.2.1.6. Acquisitions With Contrast

- FFF.2.1.7. Acquisition Parameters For X-Ray Generation (kVp, mA, …)

- FFF.2.2. Review

- FFF.2.3. Display

- FFF.2.3.1. Standard Pipeline With Enhanced XA

- FFF.2.3.1.1. User Scenario

- FFF.2.3.1.2. Encoding Outline

- FFF.2.3.1.3. Encoding Details

- FFF.2.3.1.3.1. Enhanced XA/XRF Image Module Recommendations

- FFF.2.3.1.3.2. XA/XRF Multi-frame Presentation Module Recommendations

- FFF.2.3.1.3.3. Frame VOI LUT Macro Recommendations

- FFF.2.3.1.3.4. Pixel Intensity Relationship LUT Macro Recommendations

- FFF.2.3.1.3.5. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.3.1.4. Example

- FFF.2.3.2. Mask Subtraction

- FFF.2.3.3. Pixel-shift

- FFF.2.4. Processing

- FFF.2.4.1. Projection Pixel Calibration

- FFF.2.4.2. Image Derivation and Pixel Data Properties

- FFF.2.4.2.1. User Scenario

- FFF.2.4.2.2. Encoding Outline

- FFF.2.4.2.3. Encoding Details

- FFF.2.4.2.3.1. Enhanced XA/XRF Image Module Recommendations

- FFF.2.4.2.3.2. Derivation Image Macro Recommendations

- FFF.2.4.2.3.3. Pixel Intensity Relationship LUT Macro Recommendations

- FFF.2.4.2.3.4. XA/XRF Frame Characteristics Macro Recommendations

- FFF.2.4.2.3.5. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.4.2.4. Examples

- FFF.2.5. Registration

- FFF.2.5.1. Tracking An Object of Interest On Multiple 2D Images

- FFF.2.5.1.1. User Scenario

- FFF.2.5.1.2. Encoding Outline

- FFF.2.5.1.3. Encoding Details

- FFF.2.5.1.3.1. Image Pixel Module Recommendations

- FFF.2.5.1.3.2. XA/XRF Acquisition Module Recommendations

- FFF.2.5.1.3.3. X-Ray Detector Module Recommendations

- FFF.2.5.1.3.4. X-Ray Field of View Macro Recommendations

- FFF.2.5.1.3.5. X-Ray Isocenter Reference System Macro Recommendations

- FFF.2.5.1.3.6. X-Ray Geometry Macro Recommendations

- FFF.2.5.1.3.7. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.5.1.4. Example

- GGG. Unified Worklist and Procedure Step - UPS (Informative)

- HHH. Transition from WADO to RESTful Services (Informative)

- HHH.1. Request and Response Parameters

- HHH.2. Web Services Implementation

- HHH.3. Uses for Web Services

- HHH.3.1. General Requirements

- HHH.3.2. Analysis of Use Cases

- HHH.3.3. Description of The Use Cases

- HHH.3.3.1. URI Based WADO Use Case

- HHH.3.3.2. DICOM (Encoded Content) Requester

- HHH.3.3.3. Rendered (JPEG/PDF) Requester

- HHH.3.3.4. Metadata (XML Without Pixel Data, Waveform Data, etc.) Requester

- HHH.3.3.5. DICOM Requester

- HHH.3.3.6. Frame Pixel Data Requester

- HHH.3.3.7. Bulk Data Requester

- HHH.3.3.8. Metadata Requester

- HHH.3.3.9. DICOM Creator

- HHH.3.3.10. Metadata and Bulk Data Creator

- HHH.4. Uses For QIDO Services

- HHH.5. Retired

- HHH.6. Retired

- HHH.7. Uses for Server Options Services

- III. Ophthalmic Thickness Map Use Cases (Informative)

- JJJ. Optical Surface Scan

- JJJ.1. General Information

- JJJ.2. One Single Shot Without Texture Acquisition As Point Cloud

- JJJ.3. One Single Shot With Texture Acquisition As Mesh

- JJJ.4. Storing Modified Point Cloud With Texture As Mesh

- JJJ.5. Multishot Without Texture As Point Clouds and Merged Mesh

- JJJ.6. Multishot With Two Texture Per Point Cloud

- JJJ.7. Using Colored Vertices Instead of Texture

- JJJ.8. 4D Surface Data Analysis

- JJJ.9. Referencing A Texture From Another Series

- KKK. Use-cases For Conversion of Classic Single Frame Images to Legacy Converted Enhanced Multi-frame Images (Informative)

- KKK.1. Introduction

- KKK.2. Enhanced Legacy Converted Image Storage IODs

- KKK.3. Heterogeneous Environment

- KKK.4. Compatibility With Modality Association Negotiation

- KKK.5. Query and Retrieval

- KKK.6. Referential Integrity

- KKK.7. Persistence and Determinism

- KKK.8. Source References

- KKK.9. Uncertainty Principle

- LLL. Conversion of Single Frame Images to Legacy Converted Enhanced Multi-frame Images (Informative)

- MMM. Query and Retrieval of Legacy Converted Enhanced Multi-frame Images (Informative)

- NNN. Corneal Topography and Tomography Maps (Informative)

- OOO. Radiopharmaceutical Radiation Dose Structured Report (Informative)

- PPP. Examples of Communication of Display Parameters (Informative)

- QQQ. Parametric Maps (Informative)

- RRR. Measurement Report SR Document for Planar and Volumetric ROI (Informative)

- RRR.1. Measurement Report SR Document Volumetric ROI on CT Example

- RRR.2. Measurement Report SR Document Volumetric ROI on CT Example

- RRR.3. Measurement Report SR Document Planar ROI on DCE-MR Tracer Kinetic Model Example

- RRR.4. Measurement Report SR Document Volumetric and SUV ROI on FDG PET Example

- SSS. Use of Image Libraries in SR Documents (Informative)

- TTT. X-Ray 3D Angiographic Image Encoding Examples (Informative)

- TTT.1. General Concepts of X-Ray 3D Angiography

- TTT.2. Application Cases

- TTT.2.1. Case #1: One Rotation, One 2D Instance, One Reconstruction, One X-Ray 3D Instance

- TTT.2.1.1. User Scenario

- TTT.2.1.2. Encoding Outline

- TTT.2.1.3. Encoding Details

- TTT.2.1.3.1. X-Ray 3D Angiographic Image IOD

- TTT.2.1.3.1.1. General and Enhanced Series Modules Recommendations

- TTT.2.1.3.1.2. Frame of Reference Module Recommendations

- TTT.2.1.3.1.3. General and Enhanced General Equipment Modules Recommendations

- TTT.2.1.3.1.4. Image Pixel Module Recommendations

- TTT.2.1.3.1.5. Enhanced Contrast/Bolus Module Recommendations

- TTT.2.1.3.1.6. Multi-frame Dimensions Module Recommendations

- TTT.2.1.3.1.7. Patient Orientation Module Recommendations

- TTT.2.1.3.1.8. X-Ray 3D Image Module Recommendations

- TTT.2.1.3.1.9. X-Ray 3D Angiographic Image Contributing Sources Module Recommendations

- TTT.2.1.3.1.10. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.1.3.1.11. Pixel Measures Macro Recommendations

- TTT.2.1.3.1.12. Frame Content Macro Recommendations

- TTT.2.1.3.1.13. Derivation Image Macro Recommendations

- TTT.2.1.3.1.14. Frame Anatomy Macro Recommendations

- TTT.2.1.3.1.15. X-Ray 3D Frame Type Macro Recommendations

- TTT.2.1.4. Example

- TTT.2.2. Case #2: Reconstruction From A Sub-set of Projection Frames

- TTT.2.3. Case #3: Reconstruction From A Sub-region of All Image Frames

- TTT.2.3.1. User Scenario

- TTT.2.3.2. Encoding Outline

- TTT.2.3.3. Encoding Details

- TTT.2.3.3.1. X-Ray 3D Angiographic Image IOD

- TTT.2.3.3.1.1. Frame of Reference Module Recommendations

- TTT.2.3.3.1.2. Pixel Measures Macro Recommendations

- TTT.2.3.3.1.3. Plane Position (Patient) Macro Recommendations

- TTT.2.3.3.1.4. Plane Orientation (Patient) Macro Recommendations

- TTT.2.3.3.1.5. Frame Content Macro Recommendations

- TTT.2.3.3.1.6. Frame Anatomy Macro Recommendations

- TTT.2.3.4. Example

- TTT.2.4. Case #4: Multiple Rotations, One Or More 2D Instances, One Reconstruction, One X-Ray 3D Instance

- TTT.2.5. Case #5: One Rotation, One 2D Instance, Multiple Reconstructions, One X-Ray 3D Instance

- TTT.2.5.1. User Scenario

- TTT.2.5.2. Encoding Outline

- TTT.2.5.3. Encoding Details

- TTT.2.5.3.1. 2D X-Ray Angiographic Image IOD

- TTT.2.5.3.2. X-Ray 3D Angiographic Image IOD

- TTT.2.5.3.2.1. Image Pixel Module Recommendations

- TTT.2.5.3.2.2. Multi-frame Dimension Module Recommendations

- TTT.2.5.3.2.3. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.5.3.2.4. X-Ray 3D Reconstruction Module Recommendations

- TTT.2.5.3.2.5. Frame Content Macro Recommendations

- TTT.2.5.3.2.6. Cardiac Synchronization Macro Recommendations

- TTT.2.5.3.2.7. X-Ray 3D Frame Type Macro Recommendations

- TTT.2.5.4. Example

- TTT.2.6. Case #6: Two Rotations, Two 2D Instances, Two Reconstructions, Two X-Ray 3D Instances

- TTT.2.7. Case #7: Spatial Registration of 3D X-Ray Angiography With Enhanced XA

- UUU. Ophthalmology Use Cases (Informative)

- UUU.1. Wide Field Ophthalmic Use Cases

- UUU.2. Relationship Between Ophthalmic Tomography Image and Ophthalmic Optical Coherence Tomography B-scan Volume Analysis IODs

- UUU.3. Ophthalmic Tomography Angiography Examples

- VVV. Segmentation of Images of Groups of Animals (Informative)

- WWW. Tractography Results (Informative)

- XXX. Volumetric Presentation States (Informative)

- XXX.1. Scope of Volumetric Presentation States

- XXX.2. Volumetric Presentation States vs. Static Derived Images

- XXX.3. Use Cases

- XXX.3.1. Simple Planar MPR View

- XXX.3.2. Spatially Related Views (e.g., Orthogonal)

- XXX.3.3. Replacing Set of Derived Images with Multiple Volumetric Presentation States

- XXX.3.4. Replacing Set of Derived Images With Single VPS Using Crosscurve Animation

- XXX.3.5. Volumetric Annotations (example: Trajectory Planning)

- XXX.3.6. Highlighting Areas of Interest in MPR View

- XXX.3.7. Ultrasound Color Flow MPR

- XXX.3.8. Blending with Functional Data, e.g., PET/CT or Perfusion Data

- XXX.3.9. Stent Stabilization

- XXX.3.10. Highlighting Areas of Interest in Volume Rendered View

- XXX.3.10.1. User Scenario

- XXX.3.10.2. Encoding Outline

- XXX.3.10.3. Encoding Details

- XXX.3.10.3.1. Volume Presentation State Relationship Module Recommendations

- XXX.3.10.3.2. Volume Render Geometry Module Recommendations

- XXX.3.10.3.3. Render Shading Module Recommendations

- XXX.3.10.3.4. Render Display Module Recommendations

- XXX.3.10.3.5. Volumetric Graphic Annotation Module Recommendations

- XXX.3.10.3.6. Graphic Layer Module Recommendations

- XXX.3.11. Colorized Volume Rendering of Segmented Volume Data

- XXX.3.12. Liver Resection Planning

- XXX.4. Uses of Presentation View Description in the Identification Module

- XXX.5. Compositing and the Use of Weighting Transfer Functions

- XXX.6. Usage of the Classification and Compositing Components

- YYY. Preclinical Small Animal Imaging Acquisition Context (Informative)

- ZZZ. Content Assessment (Informative)

- AAAA. Protocol Storage Examples and Concepts (Informative)

- BBBB. Color information for Parametric Object (Informative)

- CCCC. Populating The Simplified Echo Procedure Report Template (Informative)

- DDDD. Types of Echocardiography Measurement Specifications (Informative)

- EEEE. Encoding Diffusion Model Parameters for Parametric Maps and ROI Measurements (Informative)

- EEEE.1. Encoding Diffusion Model Parameters for Parametric Maps

- EEEE.2. Encoding Diffusion Model Parameters for ROIs in Measurement Report SR Documents

- EEEE.3. Relationship of Derived Diffusion Model Parametric Maps to Diffusion Weighted Source Images

- EEEE.4. Image and Frame of Derived Diffusion Model Parametric Maps

- EEEE.5. Informative References

- FFFF. Advanced Blending Presentation State Storage Encoding Example (Informative)

- GGGG. Patient Radiation Dose Structured Report Document (Informative)

- HHHH. Protocol Approval Examples and Concepts (Informative)

- IIII. Encapsulated STL (Informative)

List of Figures

- A-1. Standard Anatomic Position Directions - Whole Body

- A-2. Standard Anatomic Position Directions - Hand

- A-3. Standard Anatomic Position Directions - Foot

- A-4. Views - Anterior and Lateral

- A-5. Planes - Whole Body - Transverse

- A-6. Planes - Whole Body - Sagittal

- A-7. Planes - Whole Body - Coronal

- A-8. Planes - Hand

- A-9. Planes - Double Obliquity

- A-10. Standard Anatomic Position Directions - Paired Hands

- A-11. Breast - MedioLateral Oblique

- A-12. Panoramic Zonogram Directions

- B-1. Functional View - Modality Worklist and Modality Performed Procedure Step Management in the Context of DICOM Service Classes

- B-2. Relationship of the Original Model and the Extensions for Modality Worklist and Modality Performed Procedure Step Management

- C.4-1. Waveform Acquisition Model

- C.5-1. DICOM Waveform Information Model

- E.1-1. Top Levels of Mammography CAD SR Content Tree

- E.1-2. Summary of Detections and Analyses Levels of Mammography CAD SR Content Tree

- E.1-3. Example of Individual Impression/Recommendation Levels of Mammography CAD SR Content Tree

- E.2-1. Example of Use of Observation Context

- E.3-1. Mammograms as Described in Example 1

- E.3-2. Mammograms as Described in Example 2

- E.3-3. Content Tree Root of Example 2 Content Tree

- E.3-4. Image Library Branch of Example 2 Content Tree

- E.3-5. CAD Processing and Findings Summary Bifurcation of Example 2 Content Tree

- E.3-6. Individual Impression/Recommendation 1.2.1 from Example 2 Content Tree

- E.3-7. Single Image Finding Density 1.2.1.2.6 from Example 2 Content Tree

- E.3-8. Single Image Finding Density 1.2.1.2.7 from Example 2 Content Tree

- E.3-9. Individual Impression/Recommendation 1.2.2 from Example 2 Content Tree

- E.3-10. Individual Impression/Recommendation 1.2.3 from Example 2 Content Tree

- E.3-11. Individual Impression/Recommendation 1.2.4 from Example 2 Content Tree

- E.3-12. Single Image Finding 1.2.4.2.7 from Example 2 Content Tree

- E.3-13. Single Image Finding 1.2.4.2.8 from Example 2 Content Tree

- E.3-14. Summary of Detections Branch of Example 2 Content Tree

- E.3-15. Summary of Analyses Branch of Example 2 Content Tree

- E.3-16. Mammograms as Described in Example 3

- E.4-1. Free-response Receiver-Operating Characteristic (FROC) curve

- F.1-1. Top Levels of Chest CAD SR Content Tree

- F.1-2. Example of CAD Processing and Findings Summary Sub-Tree of Chest CAD SR Content Tree

- F.2-1. Example of Use of Observation Context

- F.3-1. Chest Radiograph as Described in Example 1

- F.3-2. Chest Radiograph as Described in Example 2

- F.3-3. Content Tree Root of Example 2 Content Tree

- F.3-4. Image Library Branch of Example 2 Content Tree

- F.3-5. CAD Processing and Findings Summary Portion of Example 2 Content Tree

- F.3-6. Summary of Detections Portion of Example 2 Content Tree

- F.3-8. Chest radiographs as Described in Example 3

- F.3-9. Chest Radiograph and CT slice as described in Example 4

- H-1. Workflow Diagram for Clinical Trials

- I.1-1. Top Level Structure of Content Tree

- I.3-1. Multiple Fetuses

- I.4-1. Explicit Dependencies

- I.5-1. Relationships to Images and Coordinates

- I.6-1. OB Numeric Biometry Measurement group Example

- I.6-2. Percentile Rank or Z-score Example

- I.6-3. Estimated Fetal Weight

- I.7-1. Selected Value Example

- I.7-2. Selected Value with Mean Example

- I.8-1. Ovaries Example

- I.8-2. Follicles Example

- K.3-1. Cardiac Stress-Echo Staged Protocol US Exam

- K.5.5-1. Example of Uninterrupted Staged-Protocol Exam Workflow

- K.5.5-2. Example Staged-Protocol Exam with Unscheduled Follow-up Stages

- K.5.5-3. Example Staged-Protocol Exam with Scheduled Follow-up Stages

- L-1. Hemodynamics Report Structure

- M.2-1. Vascular Numeric Measurement Example

- N.1-1. Top Level Structure of Content

- N.1-2. Echocardiography Measurement Group Example

- N.5-1. IVUS Report Structure

- O.1-1. Registration of Image SOP Instances

- O.3-1. Stored Registration System Interaction

- O.3-2. Interaction Scenario

- O.3-3. Coupled Modalities

- O.4-1. Spatial Registration Encoding

- O.4-2. Deformable Spatial Registration Encoding

- O.4-3. Spatial Fiducials Encoding

- Q.1-1. Top Level of Breast Imaging Report Content Tree

- Q.1-2. Breast Imaging Procedure Reported Content Tree

- Q.1-3. Breast Imaging Report Narrative Content Tree

- Q.1-4. Breast Imaging Report Supplementary Data Content Tree

- Q.1-5. Breast Imaging Assessment Content Tree

- R.1-1. System Installation with Pre-configured Configuration

- R.1-2. Configuring a System when network LDAP updates are permitted

- R.1-3. Configuring a system when LDAP network updates are not permitted

- R.4-1. Configured Device Start up (Normal Start up)

- T.1-1. Definition of Left and Right in the Case of Quantitative Arterial Analysis

- T.2-1. Definition of Diameter Symmetry with Arterial Plaques

- T.3-1. Landmark Based Wall Motion Regions

- T.3-2. Example of Centerline Wall Motion Template Usage

- T.3-3. Radial Based Wall Motion Region

- T.5-1. Artery Horizontal

- T.5-2. Artery 45° Angle

- U.1.8-1. Anatomical Landmarks and References of the Left Ocular Fundus

- U.2-1. Typical Sequence of Events

- U.3-1. Schematic representation of the human eye

- U.3-2. Tomography of the anterior segment showing a cross section through the cornea

- U.3-3. Example tomogram of the retinal nerve fiber layer with a corresponding fundus image

- U.3-4. Example of a macular scan showing a series of B-scans collected at six different angles

- U.3-5. Example 3D reconstruction

- U.3-6. Longitudinal OCT Image with Reference Image (inset)

- U.3-7. Superimposition of Longitudinal Image on Reference Image

- U.3-8. Transverse OCT Image

- U.3-9. Correlation between a Transverse OCT Image and a Reference Image Obtained Simultaneously

- U.3-10. Correspondence between Reconstructed Transverse and Longitudinal OCT Images

- U.3-11. Reconstructed Transverse and Side Longitudinal Images

- V.1-1. Spatial layout of screens for workstations in Example Scenario

- V.1-2. Sequence diagram for Example Scenario

- V.2-1. Hanging Protocol Internal Process Model

- V.2-2. Example Process Flow

- V.3-1. Chest X-Ray Hanging Protocol Example

- V.4-1. Neurosurgery Planning Hanging Protocol Example

- V.4.3-1. Group #1 is CT only display (current CT)

- V.4.3-2. Group #2 is MR only display

- V.4.3-3. Group #3 is combined MR & CT

- V.4.3-4. Group #4 is combined CT new & CT old

- V.6-1. Display Set Patient Orientation Example

- X.1-1. Dictation/Transcription Reporting Data Flow

- X.1-2. Reporting Data Flow with Image References

- X.1-3. Reporting Data Flow with Image and Presentation/Annotation References

- X.2-1. Transcribed Text Content Tree

- X.2-2. Inputs to SR Basic Text Object Content Tree

- X.3-1. CDA Section with DICOM Object References

- Y-1. Linear Window Center and Width

- Y-2. H-D Curve

- Y-3. Sigmoid LUT

- Z-1. Coordinates of a Point "P" in the Isocenter and Table coordinate systems

- AA.3-1. Basic Dose Reporting

- AA.3-2. Dose Reporting for Non-Digital Imaging

- AA.3-3. Dose Reporting Post-Processing

- CC.1-1. Example of Storage Commitment Push Model SOP Class

- CC.1-3. Example of Remote Storage of SOP Instances

- CC.1-4. Example of Storage Commitment in Conjunction with Storage Media

- DD.1-1. Modality Worklist Message Flow Example

- FF.1-1. Top Level Structure of Content Tree

- FF.2-1. CT/MR Cardiovascular Analysis Report

- FF.2-2. Vascular Morphological Analysis

- FF.2-3. Vascular Functional Analysis

- FF.2-4. Ventricular Analysis

- FF.2-5. Vascular Lesion

- HH-1. Segment Sequence Structure and References

- JJ.2-1. Surface Mesh Tetrahedron

- NN.3-1. Extension of DICOM E-R Model for Specimens

- NN.4-1. Sampling for one specimen per container

- NN.4-2. Container with two specimens from same parent

- NN.4-3. Sampling for two specimens from different ancestors

- NN.4-4. Two specimens smears on one slide

- NN.4-5. Sampling for TMA Slide

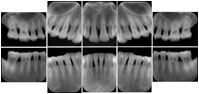

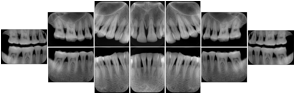

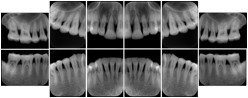

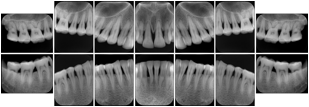

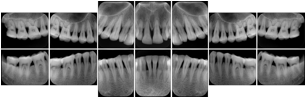

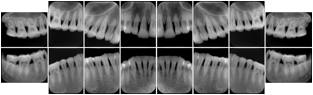

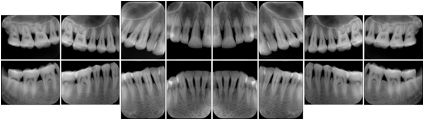

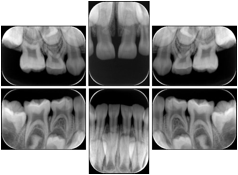

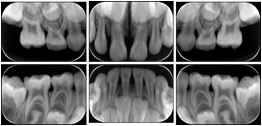

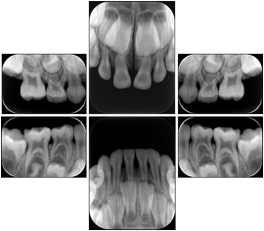

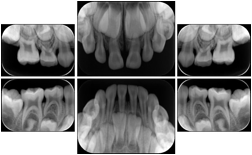

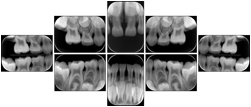

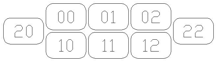

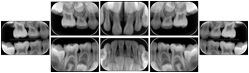

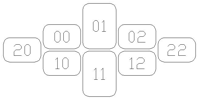

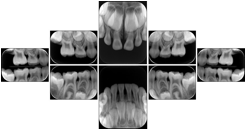

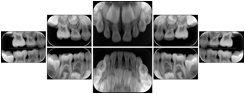

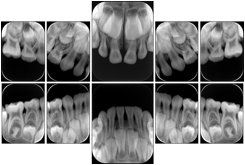

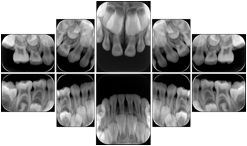

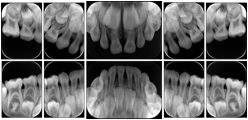

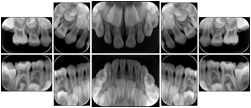

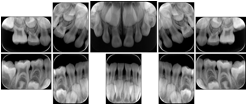

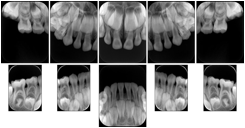

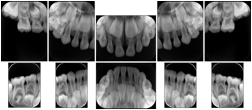

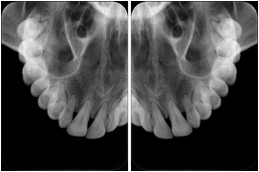

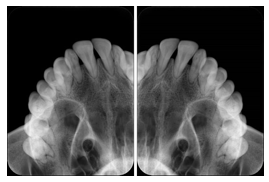

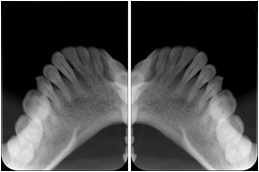

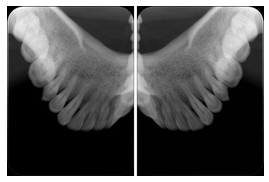

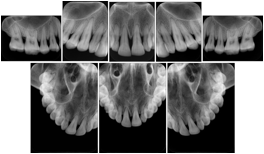

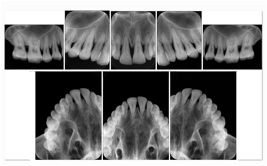

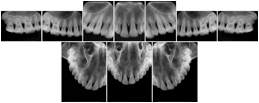

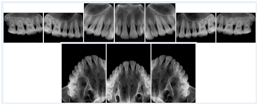

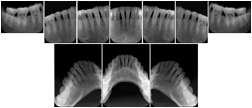

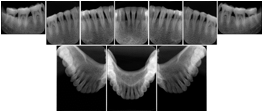

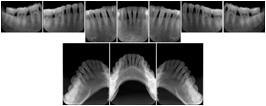

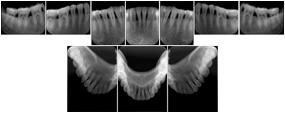

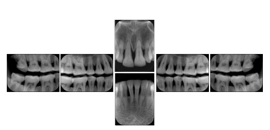

- OO-1. Intra-oral Full Mouth Series Structured Display

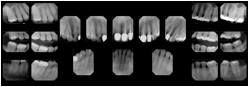

- OO-2. Cephalometric Series Structured Display

- OO-3. Ophthalmic Retinal Study Structured Display

- OO-4. OCT Retinal Study with Cross Section and Navigation Structured Display

- OO-5. Stress Echocardiography Structured Display

- OO-6. Stress-Rest Nuclear Cardiography Structured Display

- OO-7. Mammography Structured Display

- PP.3-1. Types of 3D Ultrasound Source and Derived Images

- QQ.1-1. Example 1

- QQ.1-2. Example 2

- QQ.1-3. Example 3

- QQ.1-4. Example 4

- QQ.1-5. Example 5

- QQ.1-6. Example 6

- QQ.1-7. Example 7

- SS.1-1. Top Levels of Colon CAD SR Content Tree

- SS.2-1. Example of Use of Observation Context

- SS.3-1. Colon Radiograph as Described in Example 1

- SS.3-2. Colon radiograph as Described in Example 2

- SS.3-3. Content Tree Root of Example 2 Content Tree

- SS.3-4. CAD Processing and Findings Summary Portion of Example 2 Content Tree

- SS.3-5. Summary of Detections Portion of Example 2 Content Tree

- SS.3-7. Colon radiographs as Described in Example 3

- TT-1. Stress Testing Report Template

- UU.3-1. OPT B-scan with Layers and Boundaries Identified

- UU.5-1. Macular Grid Thickness Report Display Example

- UU.5-2. ETDRS GRID Layout

- VV.1-1. Top Level Structure of Content

- VV.2-1. Pediatric, Fetal and Congenital Cardiac Ultrasound Measurement Group Example

- YY-1. Compound Graphic 'AXIS'

- YY-2. Combined Graphic Object 'DistanceLine'

- ZZ.1-1. Implant Template Mating (Example).

- ZZ.1-2. Implant Template Mating Feature IDs (Example)

- ZZ.1-3. 2D Mating Feature Coordinates Sequence (Example).

- ZZ.1-4. Implant Assembly Template (Example)

- ZZ.3-1. Implant Templates used in the Example.

- ZZ.3-2. Cup is Aligned with Patient's Acetabulum using 2 Landmarks

- ZZ.3-3. Stem is Aligned with Patient's Femur.

- ZZ.3-4. Femoral and Pelvic Side are Registered.

- ZZ.3-5. Rotational Degree of Freedom

- ZZ.5-1. Implant Versions and Derivation.

- AAA.1-1. Implantation Plan SR Document basic content tree

- AAA.2-1. Implantation Plan SR Document and Implant Template Relationship Diagram

- AAA.3-1. Total Hip Replacement Components

- AAA.4-1. Spatial Relations of Implant, Implant Template, Bite Plate and Patient CT

- BBB.3.1.1-1. Treatment Delivery Normal Flow - Internal Verification Message Sequence

- BBB.3.2.1-1. Treatment Delivery Normal Flow - External Verification Message Sequence

- BBB.3.3.1-1. Treatment Delivery Message Sequence - Override or Additional Information Required

- BBB.3.4.1-1. Treatment Delivery Message Sequence - Machine Adjustment Required

- CCC.2-1. Sagittal Diagram of Eye Anatomy (when the lens turns opaque it is called a cataract)

- CCC.2-2. Eye with a cataract

- CCC.2-3. Eye with Synthetic Intraocular Lens Placed After Removal of Cataract

- CCC.3-1. Scan Waveform Example

- CCC.4-1. Waveform Output of a Partial Coherence Interferometry (PCI) Device Example

- CCC.5-1. IOL Calculation Results Example

- DDD.2-1. Schematic Representation of the Human Eye

- DDD.2-2. Sample Report from an Automated Visual Field Machine

- DDD.2-3. Information Related to Test Reliability

- DDD.2-4. Sample Output from an Automated VF Machine Including Raw Sensitivity Values (Left, Larger Numbers are Better) and an Interpolated Gray-Scale Image

- DDD.2-5. Examples of Age Corrected Deviation from Normative Values (upper left) and Mean Defect Corrected Deviation from Normative Data (upper right)

- DDD.2-6. Example of Visual Field Loss Due to Damage to the Occipital Cortex Because of a Stroke

- DDD.2-7. Example of Diffuse Defect

- DDD.2-8. Example of Local Defect

- EEE.2-1. Z Offset Correction

- EEE.2-2. Polar to Cartesian Conversion

- EEE.3-1. IVUS Image with Vertical Longitudinal View

- EEE.3-2. IVOCT Image with Horizontal Longitudinal View

- EEE.3-3. Longitudinal Reconstruction

- FFF.1.1-1. Time Relationships of a Multi-frame Image

- FFF.1.1-2. Time Relationships of one Frame

- FFF.1.2-1. Acquisition Steps Influencing the Geometrical Relationship Between the Patient and the Pixel Data

- FFF.1.2-2. Point P Defined in the Patient Orientation

- FFF.1.2-3. Table Coordinate System

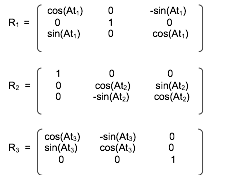

- FFF.1.2-4. At1: Table Horizontal Rotation Angle

- FFF.1.2-5. At2: Table Head Tilt Angle

- FFF.1.2-6. At3: Table Cradle Tilt Angle

- FFF.1.2-7. Point P in the Table and Isocenter Coordinate Systems

- FFF.1.2-8. Projection of a Point of the Positioner Coordinate System

- FFF.1.2-9. Physical Detector and Field of View Areas

- FFF.1.2-10. Field of View Image

- FFF.1.2-11. Examples of Field of View Rotation and Horizontal Flip

- FFF.1.4-1. Example of X-Ray Current Per-Frame of the X-Ray Acquisition

- FFF.1.5-1. Examples of Image Processing prior to the Pixel Data Storage

- FFF.1.5-2. Example of Manufacturer-Dependent Subtractive Pipeline with Enhanced XA

- FFF.2.1-1. Scenario of ECG Recording at Acquisition Modality

- FFF.2.1-2. Example of ECG Recording at Acquisition Modality

- FFF.2.1-3. Attributes of ECG Recording at Acquisition Modality

- FFF.2.1-4. Example of ECG information in the Enhanced XA image

- FFF.2.1-5. Attributes of Cardiac Synchronization in ECG Recording at Acquisition Modality

- FFF.2.1-6. Scenario of Multi-modality Waveform Synchronization

- FFF.2.1-7. Example of Multi-modality Waveform Synchronization

- FFF.2.1-8. Attributes of Multi-modality Waveform NTP Synchronization

- FFF.2.1-9. Scenario of Multi-modality Waveform Synchronization

- FFF.2.1-10. Example of Image Modality as Source of Trigger

- FFF.2.1-11. Attributes when Image Modality is the Source of Trigger

- FFF.2.1-12. Example of Waveform Modality as Source of Trigger

- FFF.2.1-13. Attributes when Waveform Modality is the Source of Trigger

- FFF.2.1-14. Detector Trajectory during Rotational Acquisition

- FFF.2.1-15. Attributes of X-Ray Positioning Per-frame on Rotational Acquisition

- FFF.2.1-16. Table Trajectory during Table Stepping

- FFF.2.1-17. Example of table positions per-frame during table stepping

- FFF.2.1-18. Attributes of the X-Ray Table Per Frame on Table Stepping

- FFF.2.1-19. Example of X-Ray Exposure Control Sensing Regions inside the Pixel Data matrix

- FFF.2.1-20. Attributes of the First Example of the X-Ray Exposure Control Sensing Regions

- FFF.2.1-21. Example of X-Ray Exposure Control Sensing Regions partially outside the Pixel Data matrix

- FFF.2.1-22. Attributes of the Second Example of the X-Ray Exposure Control Sensing Regions

- FFF.2.1-23. Schema of the Image Intensifier

- FFF.2.1-24. Generation of the Stored Image from the Detector Matrix

- FFF.2.1-25. Attributes of the Example of Field of View on Image Intensifier

- FFF.2.1-26. Attributes of the First Example of Field of View on Digital Detector

- FFF.2.1-27. Attributes of the Second Example of Field of View on Digital Detector

- FFF.2.1-28. Attributes of the Third Example of Field of View on Digital Detector

- FFF.2.1-29. Example of contrast agent injection

- FFF.2.1-30. Attributes of Contrast Agent Injection

- FFF.2.2-1. Attributes of the Example of the Variable Frame-rate Acquisition with Skip Frames

- FFF.2.3-1. Example of usage of Photometric Interpretation

- FFF.2.3-2. Attributes of Mask Subtraction and Display

- FFF.2.3-3. Example of Shared Frame Pixel Shift Macro

- FFF.2.3-4. Example of Per-Frame Frame Pixel Shift Macro

- FFF.2.3-5. Example of Per-Frame Frame Pixel Shift Macro for Multiple Shifts

- FFF.2.4-1. Attributes of X-Ray Projection Pixel Calibration

- FFF.2.4-2. Example of various successive derivations

- FFF.2.4-3. Attributes of the Example of Various Successive Derivations

- FFF.2.4-4. Example of Derivation by Square Root Transformation

- FFF.2.4-5. Attributes of the Example of Derivation by Square Root Transformation

- FFF.2.5-1. Attributes of the example of tracking an object of interest on multiple 2D images

- GGG.2-1. Diagram of Typical Pull Workflow

- GGG.3-1. Diagram of Reporting Workflow

- GGG.4-1. Diagram of Third Party Cancel

- GGG.5-1. Diagram of Radiation Therapy Planning Push Workflow

- GGG.5-2. Diagram of Remote Monitoring and Cancel

- GGG.6-1. Diagram of X-Ray Clinic Push Workflow

- III.2-1. Macular Example Mapping

- III.3-1. RNFL Example Mapping

- III.4-1. Macula Edema Thickness Map Example

- III.4-2. Macula Edema Probability Map Example

- III.6-1. Observable Layer Structures

- JJJ.1-1. Optical Surface Scan Relationships

- JJJ.2-1. One Single Shot Without Texture Acquisition As Point Cloud

- JJJ.3-1. One Single Shot With Texture Acquisition As Mesh

- JJJ.4-1. Storing Modified Point Cloud With Texture As Mesh

- JJJ.5-1. Multishot Without Texture As Point Clouds and Merged Mesh

- JJJ.6-1. Multishot With Two Textures Per Point Cloud

- JJJ.7-1. Using Colored Vertices Instead of Texture

- JJJ.9-1. Referencing A Texture From Another Series

- KKK-1. Heterogeneous environment with conversion between single and multi-frame objects

- NNN.2-1. Scale and Color Palette for Corneal Topography Maps

- NNN.3-1. Placido Ring Image Example

- NNN.3-2. Corneal Topography Axial Power Map Example

- NNN.3-3. Corneal Topography Instantaneous Power Map Example

- NNN.3-4. Corneal Topography Refractive Power Map Example

- NNN.3-5. Corneal Topography Height Map Example

- NNN.4-1. Contact Lens Fitting Simulation Example

- NNN.5-1. Corneal Axial Topography Map of keratoconus (left) with its Wavefront Map showing higher order (HO) aberrations (right)

- OOO-1. Workflow for a "Typical" Nuclear Medicine or PET Department

- OOO-2. Hot Lab Management System as the RRD Creator

- OOO-3. Workflow for a Non-imaging Procedure

- OOO-4. Workflow for an Infusion System or a Radioisotope Generator

- OOO-5. UML Sequence Diagram for Typical Workflow

- OOO-6. UML Sequence Diagram for when Radiopharmaceutical and the Modality are Started at the Same Time

- OOO-7. Radiopharmaceutical and Radiopharmaceutical Component Identification Relationship

- PPP.2.1-1. Example of System Status and Configuration Message Sequencing

- PPP.3.1-1. A Typical Display System

- PPP.3.2-1. A Tablet Display System

- TTT.1.1-1. Process flow of the X-Ray 3D Angiographic Volume Creation

- TTT.1.2-1. Relationship between the creation of 2D and 3D Instances

- TTT.2.1-1. Encoding of a 3D reconstruction from all the frames of a rotational acquisition

- TTT.2.1-2a. Attributes of 3D Reconstruction using all frames

- TTT.2.1-2b. Attributes of 3D Reconstruction using all frames (continued)

- TTT.2.2-1. Encoding of one 3D reconstruction from a sub-set of projection frames

- TTT.2.2-2. Attributes of 3D Reconstruction using every 5th frame

- TTT.2.3-1. Encoding of two 3D reconstructions of different regions of the anatomy

- TTT.2.3-2. Attributes of 3D Reconstruction of the full field of view of the projection frames

- TTT.2.3-3. Attributes of 3D Reconstruction using a sub-region of all frames

- TTT.2.4-1. Encoding of one 3D reconstruction from three rotational acquisitions in one instance

- TTT.2.4-2. Encoding of one 3D reconstruction from two rotational acquisitions in two instances

- TTT.2.4-3. Attributes of 3D Reconstruction using multiple rotation images

- TTT.2.5-1. Encoding of various 3D reconstructions at different cardiac phases

- TTT.2.5-2. Common Attributes of 3D Reconstruction of Three Cardiac Phases

- TTT.2.5-3. Per-Frame Attributes of 3D Reconstruction of Three Cardiac Phases

- TTT.2.6-1. Encoding of two 3D reconstructions at different steps of the intervention

- TTT.2.6-2. One frame of two 3D reconstructions at two different table positions

- TTT.2.6-3. Attributes of the pre-intervention 3D reconstruction

- TTT.2.6-4. Attributes of the post-intervention 3D reconstruction

- TTT.2.7-1. Rotational acquisition and the corresponding 3D reconstruction

- TTT.2.7-2. Static Enhanced XA acquisition at different table position

- TTT.2.7-3. Encoding of a 3D reconstruction and a registered 2D projection

- TTT.2.7-4. Image Position of the slice related to an application-defined patient coordinates

- TTT.2.7-5. Transformation from patient coordinates to Isocenter coordinates

- TTT.2.7-6. Transformation of the patient coordinates relative to the Isocenter coordinates

- TTT.2.7-7. Attributes of the pre-intervention 3D reconstruction

- TTT.2.7-8. Attributes of the Enhanced XA during the intervention

- UUU.1-1. Ultra-wide field image of a human retina in stereographic projection

- UUU.1.2-1. Stereographic projection example

- UUU.1.2-2. Image taken on-axis, i.e., centered on the fovea

- UUU.1.2-3. Image acquired superiorly, patient looking up

- UUU.1.2-4. Fovea in the center and clearly visible

- UUU.1.2-5. Fovea barely visible, but the transformation ensures it is still in the center

- UUU.1.2-6. Example of a polygon on the surface of a sphere

- UUU.1.3-1. Map pixel to 3D coordinate

- UUU.1.3-2. Measure the Length of a Path

- UUU.2-1. Ophthalmic Tomography Image and Ophthalmic Optical Coherence Tomography B-scan Volume Analysis IOD Relationship - Simple Example

- UUU.2-2. Ophthalmic Tomography Image and Ophthalmic Optical Coherence Tomography B-scan Volume Analysis IOD Relationship - Complex Example

- UUU.3.1-1. Diabetic Macular Ischemia example

- UUU.3.1-2. Age related Macular Degeneration example

- UUU.3.1-3. Branch Retinal Vein Occlusion example

- UUU.3.2-1. Proliferative Diabetic Retinopathy example

- WWW-1. Two Example Track Sets. "Track Set Left" with two tracks, "Track Set Right" with one track.

- XXX.1-1. Scope of Volumetric Presentation States

- XXX.3.1-1. Simple Planar MPR Pipeline

- XXX.3.2-1. Three orthogonal MPR views. From left to right transverse, coronal, sagittal

- XXX.3.3-1. Definition of a range of oblique transverse Planar MPR views on sagittal view of head scan for creation of derived images

- XXX.3.3-2. One Volumetric Presentation State is created for each of the MPR views. The VPS Instances have the same value of Presentation Display Collection UID (0070,1101)

- XXX.3.4-1. Additional MPR views are generated by moving the view that is defined in the VPS in Animation Step Size (0070,1A05) steps perpendicular along the curve

- XXX.3.5-1. Needle trajectory on a Planar MPR view

- XXX.3.6-1. Planar MPR View with Lung Nodules Colorized by Category

- XXX.3.6-2. Planar MPR VPS Pipeline for Colorizing the Lung Nodule Categories

- XXX.3.6-3. Lung nodule example pipeline

- XXX.3.7-1. Planar MPR Views of an Ultrasound Color Flow Volume

- XXX.3.7-2. Planar MPR VPS Pipeline for Ultrasound Color Flow

- XXX.3.8-1. Blending with Functional Data

- XXX.3.8-2. Planar MPR VPS Pipeline for PET/CT Blending

- XXX.3.8-3. PET/CT Classification and Compositing Details

- XXX.3.9-1. Stent Stabilization

- XXX.3.10-1. Highlighted Areas of Interest Volume Rendered View Pipeline

- XXX.3.11-1. Colorized Volume Rendering of Segmented Volume Data Pipeline

- XXX.3.11-2. Segmented Volume Rendering Pipeline

- XXX.3.12-1. Liver Resection Planning Pipeline

- XXX.3.12-2. Multiple Volume Rendering Pipeline

- XXX.5-1. Weighting LUTs for Fixed Proportional Compositing

- XXX.5-2. Weighting LUTs for Partially Transparent A Over B Compositing

- XXX.5-3. Weighting LUTs for Pass-Through Compositing

- XXX.5-4. Weighting LUTs for Threshold Compositing

- XXX.6-1. One Input To P-Values Output

- XXX.6-2. One Input to PCS-Values Output

- XXX.6-3. Two Inputs to PCS-Values Output

- XXX.6-4. Three Inputs to PCS-Values Output

- XXX.6-5. VPS Display Pipeline Equivalent to the Enhanced Blending and Display Pipeline for P-Values

- XXX.6-6. VPS Display Pipeline Equivalent to the Enhanced Blending and Display Pipeline for PCS-Values

- AAAA.1.1-1. Protocol Storage Use Cases

- BBBB.1-1. Color Parametric Map on top of an anatomical image

- BBBB.1-2. Color Parametric Map with threshold applied on top of an anatomical image

- BBBB.1-3. Resulting Color LUT Spring

- DDDD.2-1. Matching Intended Quantity with Measurement Definition

- DDDD.2-2. Result of Unclear or Ambiguous Measurement Definition

- DDDD.3-1. Inadequate Definition of Non-Standard Measurement

- FFFF.2-1. Anatomical image

- FFFF.2-2. DTI image

- FFFF.2-3. Reading task image with coloring and threshold applied

- FFFF.2-4. Listening task image with coloring and threshold applied

- FFFF.2-5. Silent word generation task image with coloring and threshold applied

- FFFF.2-6. Blended result

- FFFF.2-7. Blended result with Patient and Series information

List of Tables

- C.6-1. Correspondence Between DICOM and HL7 Channel Definition

- K.4-1. Attributes That Convey Staged Protocol Related Information

- K.5-1. Staged Protocol Image Attributes Example

- K.5-2. Comparison Of Protocol And Extra-Protocol Image Attributes Example

- Q.2-1. Breast Image Report Content for Example 1

- Q.2-2. Breast Imaging Report Content for Example 2

- Q.2-3. Breast Imaging Report Content for Example 3

- Q.2-4. Breast Imaging Report Content for Example 4

- X.3-1. WADO Reference in an HL7 CDA <linkHtml>

- X.3-2. DICOM Study Reference in an HL7 V3 Act (CDA Act Entry)

- X.3-3. DICOM Series Reference in an HL7 V3 Act (CDA Act Entry)

- X.3-4. Modality Qualifier for The Series Act.Code

- X.3-5. DICOM Composite Object Reference in an HL7 V3 Act (CDA Observation Entry)

- X.3-6. WADO Reference in an HL7 DGIMG Observation.Text

- FF.3-1. Example #1 Report Encoding

- II-1. Contrast/Bolus Module Attribute Mapping

- II-2. Enhanced Contrast/Bolus Module Attribute Mapping

- II-3. Device Module Attribute Mapping

- II-4. Intervention Module Attribute Mapping

- NN.6-1. Specimen Module for Gross Specimen

- NN.6-2. Specimen Preparation Sequence for Gross Specimen

- NN.6-3. Specimen Module for a Slide

- NN.6-4. Specimen Preparation Sequence for Slide

- OO.1.1-1. Hanging Protocol Names for Dental Image Layout based on JSOMR classification

- QQ.1-1. Enhanced US Data Type Blending Examples (Informative)

- RR-1. Reference Table for Use with Traditional Charts

- RR-2. Reference Table for Use with ETDRS Charts or Equivalent

- YY-1. Graphic Annotation Module Attributes

- YY-2. Graphic Annotation Module Attributes

- YY-3. Graphic Group Module

- YY-4. Graphic Annotation Module Attributes

- ZZ.4-1. Attributes Used to Describe a Mono Stem Implant for Total Hip Replacement

- ZZ.4-2. Attributes Used to Describe a Mono Cup Implant for Total Hip Replacement

- ZZ.4-3. Attributes Used to Describe The Assembly of Cup and Stem

- AAA.3-1. Total Hip Replacement Example

- AAA.3-2. Dental Drilling Template Example

- FFF.2.1-1. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-2. Enhanced XA Image Functional Group Macros

- FFF.2.1-3. Synchronization Module Recommendations

- FFF.2.1-4. Cardiac Synchronization Module Recommendations

- FFF.2.1-5. Enhanced XA/XRF Image Module Recommendations

- FFF.2.1-6. Frame Content Macro Recommendations

- FFF.2.1-7. General ECG IOD Modules

- FFF.2.1-8. General Series Module Recommendations

- FFF.2.1-9. Synchronization Module Recommendations

- FFF.2.1-10. Waveform Identification Module Recommendations

- FFF.2.1-11. Waveform Module Recommendations

- FFF.2.1-12. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-13. Enhanced XA Image Functional Group Macros

- FFF.2.1-14. Synchronization Module Recommendations

- FFF.2.1-15. Frame Content Macro Recommendations

- FFF.2.1-16. Waveform IOD Modules

- FFF.2.1-18. Waveform Identification Module Recommendations

- FFF.2.1-19. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-20. Enhanced XA Image Functional Group Macros

- FFF.2.1-21. Synchronization Module Recommendations

- FFF.2.1-22. Enhanced XA/XRF Image Module Recommendations

- FFF.2.1-23. Frame Content Macro Recommendations

- FFF.2.1-24. Waveform IOD Modules

- FFF.2.1-25. Synchronization Module Recommendations

- FFF.2.1-26. Waveform Identification Module Recommendations

- FFF.2.1-27. Waveform Module Recommendations

- FFF.2.1-28. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-29. Enhanced XA Image Functional Group Macros

- FFF.2.1-30. XA/XRF Acquisition Module Example

- FFF.2.1-31. X-Ray Positioner Macro Example

- FFF.2.1-32. X-Ray Isocenter Reference System Macro Example

- FFF.2.1-33. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-34. Enhanced XA Image Functional Group Macros

- FFF.2.1-35. XA/XRF Acquisition Module Example

- FFF.2.1-36. X-Ray Table Position Macro Example

- FFF.2.1-37. X-Ray Isocenter Reference System Macro Example

- FFF.2.1-38. Enhanced XA Image Functional Group Macros

- FFF.2.1-39. X-Ray Exposure Control Sensing Regions Macro Recommendations

- FFF.2.1-40. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-41. Enhanced XA Image Functional Group Macros

- FFF.2.1-42. XA/XRF Acquisition Module Recommendations

- FFF.2.1-43. X-Ray Detector Module Recommendations

- FFF.2.1-44. X-Ray Field of View Macro Recommendations

- FFF.2.1-45. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.1-46. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-47. Enhanced XA Image Functional Group Macros

- FFF.2.1-48. Contrast/Bolus Usage Macro Recommendations

- FFF.2.1-49. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.1-50. Enhanced XA Image Functional Group Macros

- FFF.2.1-51. XA/XRF Acquisition Module Recommendations

- FFF.2.1-52. X-Ray Frame Acquisition Macro Recommendations

- FFF.2.2-1. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.2-2. XA/XRF Multi-frame Presentation Module Recommendations

- FFF.2.3-1. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.3-2. Enhanced XA Image Functional Group Macros

- FFF.2.3-3. Enhanced XA/XRF Image Module Recommendations

- FFF.2.3-4. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.3-5. Mask Module Recommendations

- FFF.2.3-6. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.3-7. Enhanced XA Image Functional Group Macros

- FFF.2.3-8. Mask Module Recommendations

- FFF.2.3-9. Frame Pixel Shift Macro Recommendations

- FFF.2.4-1. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.4-2. Enhanced XA Image Functional Group Macros

- FFF.2.4-3. XA/XRF Acquisition Module Recommendations

- FFF.2.4-4. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.4-5. X-Ray Projection Pixel Calibration Macro Recommendations

- FFF.2.4-6. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.4-7. Enhanced XA Image Functional Group Macros

- FFF.2.4-8. Enhanced XA/XRF Image Module Recommendations

- FFF.2.4-9. Derivation Image Macro Recommendations

- FFF.2.4-10. XA/XRF Frame Characteristics Macro Recommendations

- FFF.2.4-11. XA/XRF Frame Pixel Data Properties Macro Recommendations

- FFF.2.5-1. Enhanced X-Ray Angiographic Image IOD Modules

- FFF.2.5-2. Enhanced XA Image Functional Group Macros

- FFF.2.5-3. XA/XRF Acquisition Module Recommendations

- GGG.1-1. SOP Classes for Typical Implementation Examples

- HHH.1-1. Summary of DICOM/Rendered URI Based WADO Parameters

- PPP.3.1-1. N-GET Request/Response Example

- PPP.3.1-2. Example of N-GET Request/Response for QA Result Module

- PPP.3.2-1. N-GET Request/Response Example

- RRR.1-1. Volumetric ROI on CT Example

- RRR.2-1. Volumetric ROI on CT Example

- RRR.3-1. Planar ROI on DCE-MR Example

- RRR.4-1. SUV ROI on FDG PET Example

- SSS.1-1. Image Library for PET-CT Example

- TTT.2.1-1. General and Enhanced Series Modules Recommendations

- TTT.2.1-2. Frame of Reference Module Recommendations

- TTT.2.1-3. Enhanced Contrast/Bolus Module Recommendations

- TTT.2.1-4. Multi-frame Dimensions Module Recommendations

- TTT.2.1-5. Patient Position to Orientation Conversion Recommendations

- TTT.2.1-6. X-Ray 3D Image Module Recommendations

- TTT.2.1-7. X-Ray 3D Angiographic Image Contributing Sources Module Recommendations

- TTT.2.1-8. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.1-9. Frame Content Macro Recommendations

- TTT.2.2-1. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.2-2. Frame Content Macro Recommendations

- TTT.2.3-1. Frame of Reference Module Recommendations

- TTT.2.3-2. Pixel Measures Macro Recommendations

- TTT.2.3-3. Frame Content Macro Recommendations

- TTT.2.4-1. Frame of Reference Module Recommendations

- TTT.2.4-2. X-Ray 3D Angiographic Image Contributing Sources Module Recommendations

- TTT.2.4-3. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.4-4. Frame Content Macro Recommendations

- TTT.2.5-1. Multi-frame Dimension Module Recommendations

- TTT.2.5-2. X-Ray 3D Angiographic Acquisition Module Recommendations

- TTT.2.5-3. X-Ray 3D Reconstruction Module Recommendations

- TTT.2.5-4. Frame Content Macro Recommendations

- TTT.2.5-5. Cardiac Synchronization Macro Recommendations

- TTT.2.6-1. Frame of Reference Module Recommendations

- TTT.2.6-2. Pixel Measures Macro Recommendations

- TTT.2.7-1. Image-Equipment Coordinate Relationship Module Recommendations

- WWW-1. Example of the Tractography Results Module

- XXX.3.1-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.1-2. Volumetric Presentation State Display Module Recommendations

- XXX.3.2-1. Volumetric Presentation State Identification Module Recommendations

- XXX.3.2-2. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.2-3. Presentation View Description Module Recommendations

- XXX.3.3-1. Volumetric Presentation State Identification Module Recommendations

- XXX.3.4-1. Presentation Animation Module Recommendations

- XXX.3.5-1. Volumetric Graphic Annotation Module Recommendations

- XXX.3.6-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.6-2. Volumetric Presentation State Cropping Module Recommendations

- XXX.3.6-3. Volumetric Presentation State Display Module Recommendations

- XXX.3.7-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.7-2. Presentation View Description Module Recommendations

- XXX.3.7-3. Multi-Planar Reconstruction Geometry Module Recommendations

- XXX.3.7-4. Volumetric Presentation State Display Module Recommendations

- XXX.3.8-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.8-3. Volumetric Presentation State Display Module Recommendations

- XXX.3.9-1. Volumetric Presentation State Identification Module Recommendations

- XXX.3.9-2. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.9-3. Presentation View Description Module Recommendations

- XXX.3.9-4. Presentation Animation Module Recommendations

- XXX.3.10.3.1-1. Volume Presentation State Relationship Module Recommendations

- XXX.3.10.3.2-1. Volume Render Geometry Module Recommendations

- XXX.3.10.3.3-1. Render Shading Module Recommendations

- XXX.3.10.3.4-1. Render Display Module Recommendations

- XXX.3.10.3.5-1. Volumetric Graphic Annotation Module Recommendations

- XXX.3.10.3.6-1. Graphic Layer Module Recommendations

- XXX.3.11.3.1-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.11.3.2-1. Volume Render Geometry Module Recommendations

- XXX.3.11.3.3-1. Render Shading Module Recommendations

- XXX.3.11.3.4-1. Render Display Module Recommendations

- XXX.3.12.3.1-1. Volumetric Presentation State Relationship Module Recommendations

- XXX.3.12.3.2-1. Volume Cropping Module Recommendations

- XXX.3.12.3.3-1. Volume Cropping Module Recommendations

- XXX.3.12.3.4-1. Render Shading Module Recommendations

- XXX.3.12.3.5-1. Render Display Module Recommendations

- XXX.4.1-1. Hanging Protocol Image Set Sequence Recommendations

- ZZZ.1-1. Content Assessment Results Module Example of a RT Plan Treatment Assessment

- AAAA.2-1. Routine Adult Head - Context

- AAAA.2-2. Routine Adult Head - Details - Scantech

- AAAA.2-2a. Patient Specification

- AAAA.2-2b. First Acquisition Protocol Element Specification

- AAAA.2-2c. Second Acquisition Protocol Element Specification

- AAAA.2-2d. First Reconstruction Protocol Element Specification

- AAAA.2-3. AAPM Routine Brain Details - Acme

- AAAA.2-3a. Patient Specification

- AAAA.2-3b. First Acquisition Protocol Element Specification

- AAAA.2-3c. Second Acquisition Protocol Element Specification

- AAAA.2-3d. Third Acquisition Protocol Element Specification

- AAAA.2-3e. First Reconstruction Protocol Element Specification

- AAAA.2-3f. Second Reconstruction Protocol Element Specification

- AAAA.2-3g. First Storage Protocol Element Specification

- AAAA.2-3h. Second Storage Protocol Element Specification

- AAAA.2-3i. Third Storage Protocol Element Specification

- AAAA.3-1. CT Tumor Volumetric Measurement - Context

- AAAA.3-2. CT Tumor Volumetric Measurement - Details - Acme

- AAAA.3-2a. First Acquisition Protocol Element Specification

- AAAA.3-2b. Second Acquisition Protocol Element Specification

- AAAA.3-2c. First Reconstruction Protocol Element Specification

- BBBB.2-1. Example data for the Floating Point Image Pixel Module

- BBBB.2-2. Example data for the Dimension Organization Module

- BBBB.2-3. Example data for the Pixel Measures Macro

- BBBB.2-4. Example data for the Frame Content Macro

- BBBB.2-5. Example data for the Identity Pixel Value Transformation Macro

- BBBB.2-6. Example data for the Frame VOI LUT With LUT Macro

- BBBB.2-7. Example data for the Real World Value Mapping Macro

- BBBB.2-8. Example data for the Palette Color Lookup Table Module

- BBBB.2-9. Example data for the Stored Value Color Range Macro

- BBBB.2-10. Example data for the Parametric Map Frame Type Macro

- FFFF.3-1. Encoding Example

- GGGG.1-1. Skin Dose Map Example

- GGGG.2-1. Dual-source CT Organ Radiation Dose Example

- HHHH-1. Approval by Chief Radiologist

- IIII.1-1. CT Derived Encapsulated STL Example

- IIII.2-1. Fused CT/MR Derived Encapsulated STL Example

List of Examples

- Q.2-1. Report Sample: Narrative Text Only

- Q.2-2. Report Sample: Narrative Text with Minimal Supplementary Data

- Q.2-3. Report Sample: Narrative Text with More Extensive Supplementary Data

- Q.2-4. Report Sample: Multiple Procedures, Narrative Text with Some Supplementary Data

- BB.1-1. Simple Example of Print Management SCU Session

- FF.3-1. Presentation of Report Example #1

- WW.1-1. Sample Audit Event Report

The information in this publication was considered technically sound by the consensus of persons engaged in the development and approval of the document at the time it was developed. Consensus does not necessarily mean that there is unanimous agreement among every person participating in the development of this document.

NEMA standards and guideline publications, of which the document contained herein is one, are developed through a voluntary consensus standards development process. This process brings together volunteers and/or seeks out the views of persons who have an interest in the topic covered by this publication. While NEMA administers the process and establishes rules to promote fairness in the development of consensus, it does not write the document and it does not independently test, evaluate, or verify the accuracy or completeness of any information or the soundness of any judgments contained in its standards and guideline publications.

NEMA disclaims liability for any personal injury, property, or other damages of any nature whatsoever, whether special, indirect, consequential, or compensatory, directly or indirectly resulting from the publication, use of, application, or reliance on this document. NEMA disclaims and makes no guaranty or warranty, expressed or implied, as to the accuracy or completeness of any information published herein, and disclaims and makes no warranty that the information in this document will fulfill any of your particular purposes or needs. NEMA does not undertake to guarantee the performance of any individual manufacturer or seller's products or services by virtue of this standard or guide.

In publishing and making this document available, NEMA is not undertaking to render professional or other services for or on behalf of any person or entity, nor is NEMA undertaking to perform any duty owed by any person or entity to someone else. Anyone using this document should rely on his or her own independent judgment or, as appropriate, seek the advice of a competent professional in determining the exercise of reasonable care in any given circumstances. Information and other standards on the topic covered by this publication may be available from other sources, which the user may wish to consult for additional views or information not covered by this publication.

NEMA has no power, nor does it undertake to police or enforce compliance with the contents of this document. NEMA does not certify, test, or inspect products, designs, or installations for safety or health purposes. Any certification or other statement of compliance with any health or safety-related information in this document shall not be attributable to NEMA and is solely the responsibility of the certifier or maker of the statement.

This DICOM Standard was developed according to the procedures of the DICOM Standards Committee.

The DICOM Standard is structured as a multi-part document using the guidelines established in [ISO/IEC Directives, Part 2].

PS3.1 should be used as the base reference for the current parts of this standard.

DICOM® is the registered trademark of the National Electrical Manufacturers Association for its standards publications relating to digital communications of medical information, all rights reserved.

HL7® and CDA® are the registered trademarks of Health Level Seven International, all rights reserved.

SNOMED®, SNOMED Clinical Terms®, SNOMED CT® are the registered trademarks of the International Health Terminology Standards Development Organisation (IHTSDO), all rights reserved.

LOINC® is the registered trademark of Regenstrief Institute, Inc., all rights reserved.

This part of the DICOM Standard contains explanatory information in the form of Normative and Informative Annexes.

The following standards contain provisions which, through reference in this text, constitute provisions of this Standard. At the time of publication, the editions indicated were valid. All standards are subject to revision, and parties to agreements based on this Standard are encouraged to investigate the possibilities of applying the most recent editions of the standards indicated below.

2.1 International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC)

[ISO/IEC Directives, Part 2] 2016/05. 7.0. Rules for the structure and drafting of International Standards. http://www.iec.ch/members_experts/refdocs/iec/isoiecdir-2%7Bed7.0%7Den.pdf .

Terms listed in Section 3 are capitalized throughout the document.

This Annex was formerly located in Annex E “Explanation of Patient Orientation (Normative)” in PS3.3 in the 2003 and earlier revisions of the standard.

This Annex provides an explanation of how to use the patient orientation data elements.

As for the hand, the direction labels are based on the foot in the standard anatomic position. For the right foot, for example, RIGHT will be in the direction of the 5th toe. This assignment will remain constant through movement or positioning of the extremity. This is also true of the HEAD and FOOT directions.

This Annex was formerly located in Annex G “Integration of Modality Worklist and Modality Performed Procedure Step in the Original DICOM Standard (Informative)” in PS3.3 in the 2003 and earlier revisions of the standard.

DICOM was published in 1993 and effectively addresses image communication for a number of modalities and Image Management functions for a significant part of the field of medical imaging. Since then, many additional medical imaging specialties have contributed to the extension of the DICOM Standard and developed additional Image Object Definitions. Furthermore, there have been discussions about the harmonization of the DICOM Real-World domain model with other standardization bodies. This effort has resulted in a number of extensions to the DICOM Standard. The integration of the Modality Worklist and Modality Performed Procedure Step addresses an important part of the domain area that was not included initially in the DICOM Standard. At the same time, this integration is a step toward harmonization with other standardization bodies (CEN TC 251, HL7, etc.).

The purpose of this Annex is to show how the original DICOM Standard relates to the extension for Modality Worklist Management and Modality Performed Procedure Step. The two included figures outline the void filled by the Modality Worklist Management and Modality Performed Procedure Step specification, and the relationship between the original DICOM Data Model and the extended model.

Figure B-1. Functional View - Modality Worklist and Modality Performed Procedure Step Management in the Context of DICOM Service Classes

The management of a patient starts when the patient enters a physical facility (e.g., a hospital, a clinic, an imaging center) or even before that time. The DICOM Patient Management SOP Class provides many of the functions that are of interest to imaging departments. Figure B-1 shows an example in which an order for a procedure is presumed to have been issued for a patient. The order for an imaging procedure results in the creation of a Study Instance within the DICOM Study Management SOP Class. At the same time (A), the Modality Worklist Management SOP Class enables a modality operator to request the scheduling information for the ordered procedures. A worklist can be constructed based on the scheduling information. The handling of the requested imaging procedure in DICOM Study Management is closely related to its handling in DICOM Worklist Management. The worklist also conveys patient/study demographic information that can be incorporated into the images.

Worklist Management is completed once the imaging procedure has started and the Scheduled Procedure Step has been removed from the Worklist, possibly in response to the Modality Performed Procedure Step (B). However, Study Management continues throughout all stages of the Study, including interpretation. The actual procedure performed (based on the request) and information about the images produced are conveyed by the DICOM Study Component SOP Class or the Modality Performed Procedure Step SOP Classes.

Figure B-2. Relationship of the Original Model and the Extensions for Modality Worklist and Modality Performed Procedure Step Management

Figure B-2 shows the relationship between the original DICOM Real-World model and the extensions of this Real-World model required to support the Modality Worklist and the Modality Performed Procedure Step. The new parts of the model add entities that are needed to request, schedule, and describe the performance of imaging procedures, concepts that were not supported in the original model. The entities required for representing the Worklist form a natural extension of the original DICOM Real-World model.

Common to both the original model and the extended model is the Patient entity. The Service Episode is an administrative concept that has been shown in the extended model in order to pave the way for future adaptation to a common model supported by other standardization groups including HL7, CEN TC 251 WG 3, CAP-IEC, etc. The Visit is in the original model but not shown in the extended model because it is a part of the Service Episode.

There is a 1 to 1 relationship between a Requested Procedure and the DICOM Study (A). A DICOM Study is the result of a single Requested Procedure. A Requested Procedure can result in only one Study.

An n:m relationship exists between a Scheduled Procedure Step and a Modality Performed Procedure Step (B). The concept of a Modality Performed Procedure Step is a superset of the Study Component concept contained in the original DICOM model. The Modality Performed Procedure Step SOP Classes provide a means to relate Modality Performed Procedure Steps to Scheduled Procedure Steps.
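The two cardinalities described above can be sketched as a small data model. This is a minimal illustration only and is not part of the Standard: the class names, field names, the `link` helper, and all identifier values are hypothetical, and only the 1 to 1 and n:m relationships themselves are taken from the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional


# eq=False keeps identity-based comparison, which avoids recursive
# equality checks between objects that reference each other.
@dataclass(eq=False)
class Study:
    study_instance_uid: str


@dataclass(eq=False)
class RequestedProcedure:
    procedure_id: str
    # 1 to 1: a Requested Procedure results in only one Study.
    study: Optional[Study] = None


@dataclass(eq=False)
class ScheduledProcedureStep:
    sps_id: str
    performed: List["PerformedProcedureStep"] = field(
        default_factory=list, repr=False
    )


@dataclass(eq=False)
class PerformedProcedureStep:
    mpps_id: str
    scheduled: List[ScheduledProcedureStep] = field(
        default_factory=list, repr=False
    )


def link(sps: ScheduledProcedureStep, mpps: PerformedProcedureStep) -> None:
    """Record one association in both directions (n:m relationship)."""
    if mpps not in sps.performed:
        sps.performed.append(mpps)
    if sps not in mpps.scheduled:
        mpps.scheduled.append(sps)


# Illustrative values only.
requested = RequestedProcedure("RP-1", Study("1.2.3"))
sps_a = ScheduledProcedureStep("SPS-A")
sps_b = ScheduledProcedureStep("SPS-B")
mpps_1 = PerformedProcedureStep("MPPS-1")
mpps_2 = PerformedProcedureStep("MPPS-2")

link(sps_a, mpps_1)  # one performed step may satisfy two scheduled steps
link(sps_b, mpps_1)
link(sps_a, mpps_2)  # one scheduled step may be performed in two steps
```

Holding the association on both sides makes it possible to navigate from a scheduled step to what was performed and back, which mirrors the role the text assigns to the Modality Performed Procedure Step SOP Classes.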

This Annex was formerly located in Annex J “Waveforms (Informative)” in PS3.3 in the 2003 and earlier revisions of the standard.

Waveform acquisition is part of both the medical imaging environment and the general clinical environment. Because of its broad use, there has been significant previous and complementary work in waveform standardization of which the following are particularly important:

- ASTM E31.16 - E1467: Specification for Transferring Digital Neurophysiological Data Between Independent Computer Systems

- CEN TC251 PT5-007 - prENV1064 draft: Standard Communications Protocol for Computer-Assisted Electrocardiography (SCP-ECG)

- CEN TC251 PT5-021 - draft

- HL7 Automated Data SIG

- IEEE P1073 - draft

- DICOM Section A.10 in PS3.3

For DICOM, the domain of waveform standardization is waveform acquisition within the imaging context. It is specifically meant to address waveform acquisitions that will be analyzed with other data that is transferred and managed using the DICOM protocol. It allows the addition of waveform data to that context with minimal incremental cost. Further, it leverages the DICOM persistent object capability for maintaining referential relationships to other data collected in a multi-modality environment, including references necessary for multi-modality synchronization.

Waveform interchange in other clinical contexts may use different protocols more appropriate to those domains. In particular, HL7 may be used for transfer of waveform observations to general clinical information systems, and MIB may be used for real-time physiological monitoring and therapy.

The waveform information object definition in DICOM has been specifically harmonized at the semantic level with the HL7 waveform message format. The use of a common object model allows straightforward transcoding and interoperation between systems that use DICOM for waveform interchange and those that use HL7, and may be viewed as an example of common semantics implemented in the differing syntaxes of two messaging systems.

Note

HL7 allows transport of DICOM SOP Instances (information objects) encapsulated within HL7 messages. Since the DICOM and HL7 waveform semantics are harmonized, DICOM Waveform SOP Instances need not be transported as encapsulated data, as they can be transcoded to native HL7 Waveform Observation format.

The following are specific use case examples for waveforms in the imaging environment.

- Case 1: Catheterization Laboratory - During a cardiac catheterization, several independent pieces of data acquisition equipment may be brought together for the exam. An electrocardiographic subsystem records surface ECG waveforms; an X-ray angiographic subsystem records motion images; a hemodynamic subsystem records intracardiac pressures from a sensor on the catheter. These subsystems send their acquired data by network to a repository. These data are assembled at an analytic workstation by retrieving from the repository. For a left ventriculographic procedure, the ECG is used by the physician to determine the time of maximum and minimum ventricular fill, and when coordinated with the angiographic images, an accurate estimate of the ejection fraction can be calculated. For a valvuloplasty procedure, the hemodynamic waveforms are used to calculate the pre-intervention and post-intervention pressure gradients.

- Case 2: Electrophysiology Laboratory - An electrophysiological exam will capture waveforms from multiple sensors on a catheter; the placement of the catheter in the heart is captured on an angiographic image. At an analytic workstation, the exact location of the sensors can thus be aligned with a model of the heart, and the relative timing of the arrival of the electrophysiological waves at different cardiac locations can be mapped.

- Case 3: Stress Exam - A stress exam may involve the acquisition of both ECG waveforms and echocardiographic ultrasound images from portable equipment at different stages of the test. The waveforms and the echocardiograms are output on an interchange disk, which is then input and read at a review station. The physician analyzes both types of data to make a diagnosis of cardiac health.
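Case 1 refers to estimating the ejection fraction from the maximum and minimum ventricular fill. As a worked illustration, the conventional definition is EF = (EDV - ESV) / EDV, where EDV is the end-diastolic (maximum) volume and ESV is the end-systolic (minimum) volume. The function name and the sample volumes below are illustrative assumptions, not values from the Standard, which does not prescribe how the volumes are measured.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Return the ejection fraction as a value between 0 and 1.

    edv_ml: end-diastolic volume (maximum ventricular fill), in mL.
    esv_ml: end-systolic volume (minimum ventricular fill), in mL.
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must lie between 0 and EDV")
    return (edv_ml - esv_ml) / edv_ml


# Hypothetical volumes chosen only to show the arithmetic: (120 - 48) / 120.
example_ef = ejection_fraction(120.0, 48.0)
```

The ECG timing matters because it identifies which angiographic frames correspond to maximum and minimum fill; the arithmetic itself is the simple ratio shown here.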

Synchronization of acquisition across multiple modalities in a single study (e.g., angiography and electrocardiography) requires either a shared trigger or a shared clock. A Synchronization Module within the Frame of Reference Information Entity specifies the synchronization mechanism. A common temporal environment shared by multiple pieces of equipment is identified by a shared Synchronization Frame of Reference UID. How this UID is determined and distributed to the participating equipment is outside the scope of the standard.
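A receiving application could use the shared UID to decide which objects belong to the same temporal environment. The following is a minimal sketch under stated assumptions: instances are represented as plain dictionaries rather than parsed DICOM objects, the keys mirror DICOM attribute keywords, and the helper name and all UID values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping

# Dictionary key assumed to hold the Synchronization Frame of Reference UID.
SYNC_FOR_KEY = "SynchronizationFrameOfReferenceUID"


def group_by_sync_frame(
    instances: Iterable[Mapping[str, str]],
) -> Dict[str, List[Mapping[str, str]]]:
    """Group instances that declare the same synchronization UID.

    Instances without the UID are left out, since nothing indicates that
    they share a temporal environment with any other instance.
    """
    groups: Dict[str, List[Mapping[str, str]]] = defaultdict(list)
    for instance in instances:
        uid = instance.get(SYNC_FOR_KEY)
        if uid:
            groups[uid].append(instance)
    return dict(groups)


# Illustrative instances; all UID values are made up.
instances = [
    {"SOPInstanceUID": "1.2.3.1", "Modality": "XA", SYNC_FOR_KEY: "1.2.3.100"},
    {"SOPInstanceUID": "1.2.3.2", "Modality": "ECG", SYNC_FOR_KEY: "1.2.3.100"},
    {"SOPInstanceUID": "1.2.3.3", "Modality": "HD", SYNC_FOR_KEY: "1.2.3.200"},
    {"SOPInstanceUID": "1.2.3.4", "Modality": "US"},
]
synchronized = group_by_sync_frame(instances)
```

In this example the angiographic images and the ECG waveform fall into one group and can be aligned on a common time base, while the instance lacking the UID is not grouped with anything.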

The method used for time synchronization of equipment clocks is implementation or site specific, and is therefore outside the scope of the Standard. If required, standard time distribution protocols are available (e.g., NTP, IRIG, GPS).

An informative description of time distribution methods can be found at: http://www.bancomm.com/cntpApp.htm